Designing Energy-Efficient Convolutional Neural Networks using Energy-Aware Pruning

IEEE CVPR 2017

- Tien-Ju Yang MIT

- Yu-Hsin Chen MIT

- Vivienne Sze MIT

Email: dnn-energy at mit dot edu

Welcome to the DNN Energy Estimation Website!

- A summary of all related papers can be found here. Other related websites and resources can be found here.

- Follow @eems_mit or subscribe to our mailing list for updates on the Eyeriss Project.

- To find out more about other on-going research in the Energy-Efficient Multimedia Systems (EEMS) group at MIT, please go here.

News

- 04/17/2018

New paper on "NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications" will be presented at ECCV 2018. Available on arXiv. [ PDF ].

- 02/12/2018

New paper on "Understanding the Limitations of Existing Energy-Efficient Design Approaches for Deep Neural Networks" [ PDF ].

- 06/23/2017

Energy-Aware Pruned DNN Models released here.

- 03/25/2017

Energy estimation website goes live!

Abstract

Deep convolutional neural networks (CNNs) are indispensable to state-of-the-art computer vision algorithms. However, they are still rarely deployed on battery-powered mobile devices, such as smartphones and wearable gadgets, where vision algorithms can enable many revolutionary real-world applications. The key limiting factor is the high energy consumption of CNN processing due to its high computational complexity. While many previous efforts try to reduce the CNN model size or the amount of computation, we find that these reductions do not necessarily result in lower energy consumption, so neither model size nor amount of computation serves as a good metric for energy cost estimation.
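To make the last point concrete, here is a minimal sketch of a toy energy model in Python. It is not the estimation methodology from the paper: the `ConvLayer` class, the `estimate_energy` function, and the cost constants `E_MAC` and `E_MEM` are all hypothetical, and the model assumes every weight and feature map value is fetched from memory exactly once. Even under these crude assumptions, two layers with the same number of weights can have very different estimated energy.

```python
from dataclasses import dataclass

# Hypothetical normalized costs, chosen only for illustration.
# They are NOT the hardware-derived parameters used in the paper.
E_MAC = 1.0    # energy of one multiply-accumulate
E_MEM = 100.0  # energy of one memory access, relative to one MAC


@dataclass
class ConvLayer:
    kernel: int    # filter height and width
    c_in: int      # input channels
    c_out: int     # output channels
    out_size: int  # output feature map height and width (input assumed equal)

    def num_weights(self) -> int:
        return self.kernel * self.kernel * self.c_in * self.c_out

    def num_macs(self) -> int:
        # Every weight is applied once per output position.
        return self.num_weights() * self.out_size * self.out_size

    def num_fmap_values(self) -> int:
        # Input plus output feature map values.
        return (self.c_in + self.c_out) * self.out_size * self.out_size


def estimate_energy(layer: ConvLayer) -> float:
    """Toy estimate: computation energy plus data movement energy."""
    data_accesses = layer.num_weights() + layer.num_fmap_values()
    return layer.num_macs() * E_MAC + data_accesses * E_MEM


if __name__ == "__main__":
    early = ConvLayer(kernel=3, c_in=64, c_out=64, out_size=56)
    late = ConvLayer(kernel=3, c_in=64, c_out=64, out_size=7)
    for name, layer in (("early", early), ("late", late)):
        print(name, layer.num_weights(), layer.num_macs(), estimate_energy(layer))
```

Both layers hold the same number of weights, yet the layer operating on the larger feature map performs far more computation and moves far more data, so a weight count alone cannot distinguish them.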

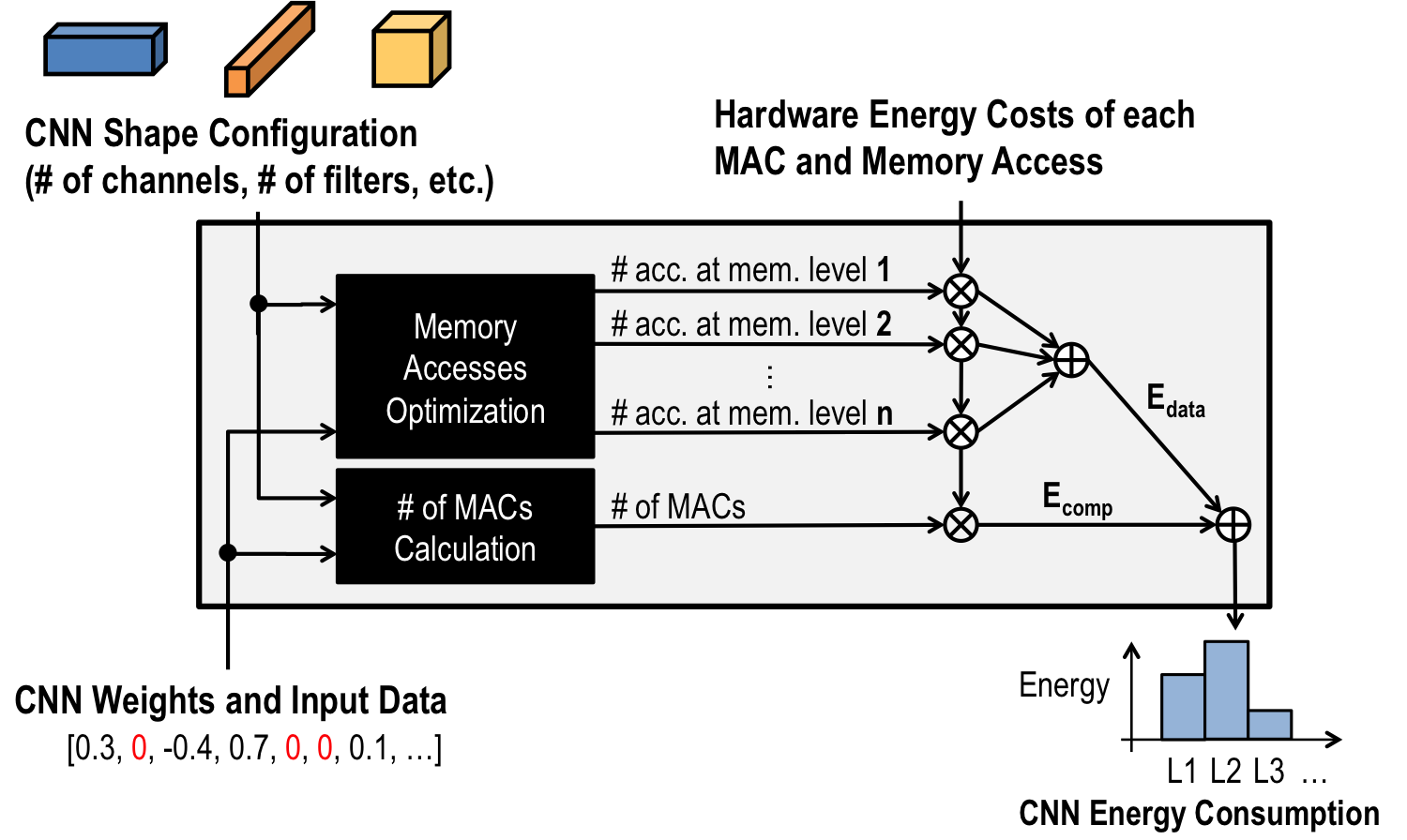

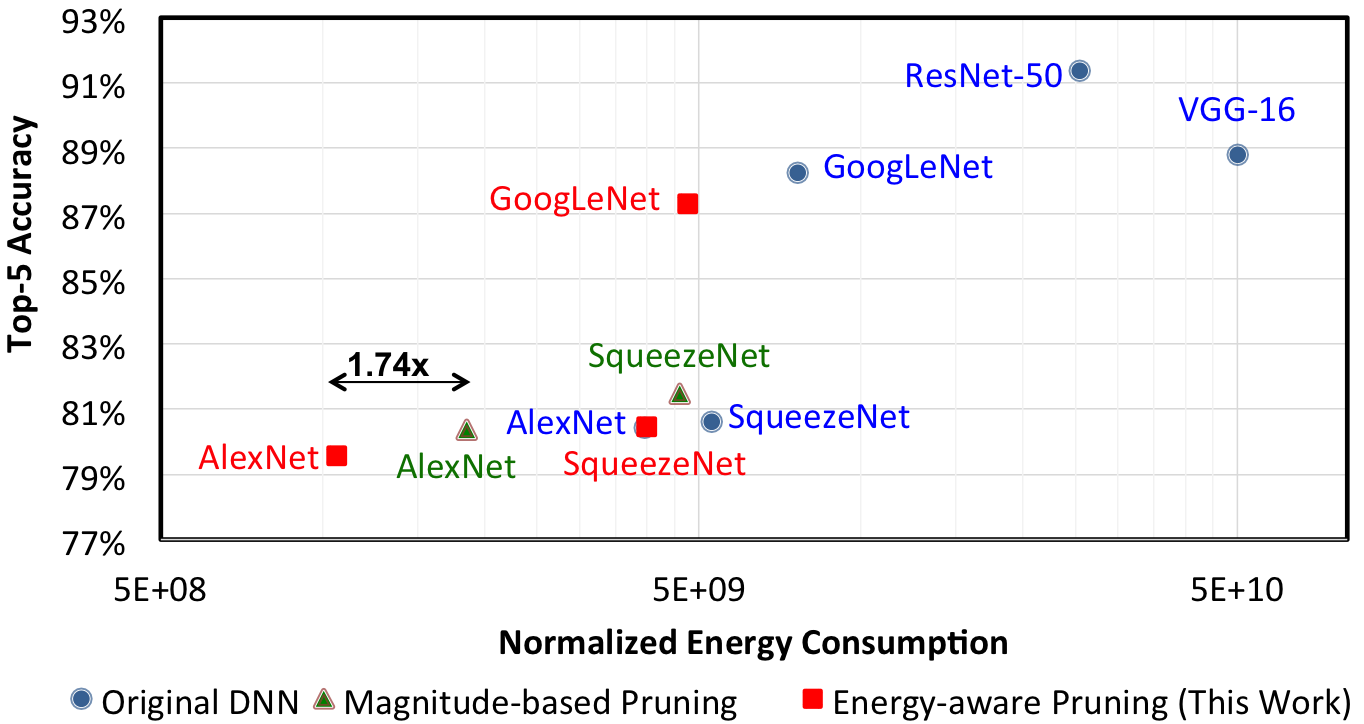

To close the gap between CNN design and energy consumption optimization, we propose an energy-aware pruning algorithm for CNNs that directly uses energy consumption estimation of a CNN to guide the pruning process. The energy estimation methodology uses parameters extrapolated from actual hardware measurements that target realistic battery-powered system setups. The proposed layer-by-layer pruning algorithm also prunes more aggressively than previously proposed pruning methods by minimizing the error in the output feature maps instead of the filter weights. For each layer, the weights are first pruned and then locally fine-tuned with a closed-form least-squares solution to quickly restore the accuracy. After all layers are pruned, the entire network is further globally fine-tuned using back-propagation. With the proposed pruning method, the energy consumption of AlexNet and GoogLeNet is reduced by 3.7x and 1.6x, respectively, with less than 1% top-5 accuracy loss. Finally, we show that pruning AlexNet with a reduced number of target classes can greatly decrease the number of weights, but the energy reduction is limited.
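The local fine-tuning step can be illustrated with a small NumPy sketch. This is an assumption-laden simplification, not the released implementation: the layer is treated as a plain matrix product, the function name `prune_and_refit` and the `keep_ratio` parameter are hypothetical, and the weights to remove are chosen by magnitude as a stand-in for the paper's selection criterion. What it does show is the closed-form idea: after pruning, the surviving weights of each output channel are re-solved by least squares so that the output feature maps stay as close as possible to the unpruned ones.

```python
import numpy as np


def prune_and_refit(X, W, keep_ratio):
    """Prune a linear layer, then refit the kept weights by least squares.

    X: (n_samples, n_in) layer inputs collected from sample data.
    W: (n_in, n_out) dense weights of the unpruned layer.
    Returns (W_pruned, W_refit), both with the same sparsity pattern.
    """
    Y = X @ W  # reference output feature maps of the unpruned layer
    k = max(1, int(round(keep_ratio * W.shape[0])))
    W_pruned = np.zeros_like(W)
    W_refit = np.zeros_like(W)
    for j in range(W.shape[1]):
        # Stand-in selection rule (assumption): keep the k largest-magnitude weights.
        keep = np.argsort(np.abs(W[:, j]))[-k:]
        W_pruned[keep, j] = W[keep, j]
        # Closed-form local fine-tuning: minimize ||X[:, keep] @ w - Y[:, j]||_2.
        coef, *_ = np.linalg.lstsq(X[:, keep], Y[:, j], rcond=None)
        W_refit[keep, j] = coef
    return W_pruned, W_refit


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 16))
    W = rng.normal(size=(16, 4))
    W_pruned, W_refit = prune_and_refit(X, W, keep_ratio=0.5)
    Y = X @ W
    print("output error after pruning:", np.linalg.norm(X @ W_pruned - Y))
    print("output error after refit:  ", np.linalg.norm(X @ W_refit - Y))
```

Because the pruned weights are themselves a candidate solution on the same support, the refit output error can never exceed the pruned output error, which is why this step restores accuracy quickly without back-propagation.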

Energy Estimation Methodology

Energy versus Accuracy Tradeoff

(Note: Results based on SqueezeNet v.1.0)

Press Coverage

Energy Estimation Tool

BibTeX

@inproceedings{cvpr_2017_yang_energy,

author = {Yang, Tien-Ju and Chen, Yu-Hsin and Sze, Vivienne},

title = {{Designing Energy-Efficient Convolutional Neural Networks using Energy-Aware Pruning}},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2017},

}

Related Papers

- T.-J. Yang, A. Howard, B. Chen, X. Zhang, A. Go, V. Sze, H. Adam, "NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications," European Conference on Computer Vision (ECCV), September 2018. [ paper arXiv ]

- Y.-H. Chen*, T.-J. Yang*, J. Emer, V. Sze, "Understanding the Limitations of Existing Energy-Efficient Design Approaches for Deep Neural Networks," SysML Conference, February 2018. [ paper PDF | talk video ] Selected for Oral Presentation

- V. Sze, T.-J. Yang, Y.-H. Chen, J. Emer, "Efficient Processing of Deep Neural Networks: A Tutorial and Survey," Proceedings of the IEEE, vol. 105, no. 12, pp. 2295-2329, December 2017. [ paper PDF ]

- T.-J. Yang, Y.-H. Chen, J. Emer, V. Sze, "A Method to Estimate the Energy Consumption of Deep Neural Networks," Asilomar Conference on Signals, Systems and Computers, Invited Paper, October 2017. [ paper PDF | slides PDF ]

- T.-J. Yang, Y.-H. Chen, V. Sze, "Designing Energy-Efficient Convolutional Neural Networks using Energy-Aware Pruning," IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017. [ paper arXiv | poster PDF | DNN energy estimation tool LINK | DNN models LINK ] Highlighted in MIT News

- Y.-H. Chen, J. Emer, V. Sze, "Using Dataflow to Optimize Energy Efficiency of Deep Neural Network Accelerators," IEEE Micro's Top Picks from the Computer Architecture Conferences, May/June 2017. [ PDF ]

- A. Suleiman*, Y.-H. Chen*, J. Emer, V. Sze, "Towards Closing the Energy Gap Between HOG and CNN Features for Embedded Vision," IEEE International Symposium on Circuits and Systems (ISCAS), Invited Paper, May 2017. [ paper PDF | slides PDF | talk video ]

- V. Sze, Y.-H. Chen, J. Emer, A. Suleiman, Z. Zhang, "Hardware for Machine Learning: Challenges and Opportunities," IEEE Custom Integrated Circuits Conference (CICC), Invited Paper, May 2017. [ paper arXiv | slides PDF ] Received Best Invited Paper Award

- Y.-H. Chen, T. Krishna, J. Emer, V. Sze, "Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks," IEEE Journal of Solid-State Circuits (JSSC), ISSCC Special Issue, Vol. 52, No. 1, pp. 127-138, January 2017. [ PDF ]

- Y.-H. Chen, J. Emer, V. Sze, "Eyeriss: A Spatial Architecture for Energy-Efficient Dataflow for Convolutional Neural Networks," International Symposium on Computer Architecture (ISCA), pp. 367-379, June 2016. [ paper PDF | slides PDF ] Selected for IEEE Micro’s Top Picks special issue on "most significant papers in computer architecture based on novelty and long-term impact" from 2016

- Y.-H. Chen, T. Krishna, J. Emer, V. Sze, "Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks," IEEE International Solid-State Circuits Conference (ISSCC), pp. 262-264, February 2016. [ paper PDF | slides PDF | poster PDF | demo video | project website ] Highlighted in EETimes and MIT News.